Lang Cao

Hi! 👋 I'm Lang Cao, a PhD student in Computer Science at the University of Illinois Urbana-Champaign (UIUC), happily working with Prof. Yue Guo. I interned at Microsoft Research for about one year, where I had a wonderful experience and was fortunate to collaborate with Haoyu Dong and Mengyu Zhou. I also received my Master's degree in Computer Science from UIUC and earned my Bachelor's degree from Wuhan University of Technology. I am an open researcher. My research primarily focuses on artificial intelligence (AI), particularly large language models (LLMs) and their applications. Beyond AI research, I'm also an active practitioner in Web3 and quantitative trading.

Research

My academic research focuses on large language models (LLMs), with an emphasis on improving their reasoning capabilities, building more effective LLM agents for complex tasks, and exploring their potential in healthcare applications. My background is mainly in natural language processing (NLP) and machine learning (ML). My previous research experience spans various areas, including LLM Reasoning, LLM & RAG, LLM for Table Understanding, AI for Health, LLM Agent, NLP Applications, as well as other topics related to LLMs and ML. Selected research projects are listed below.

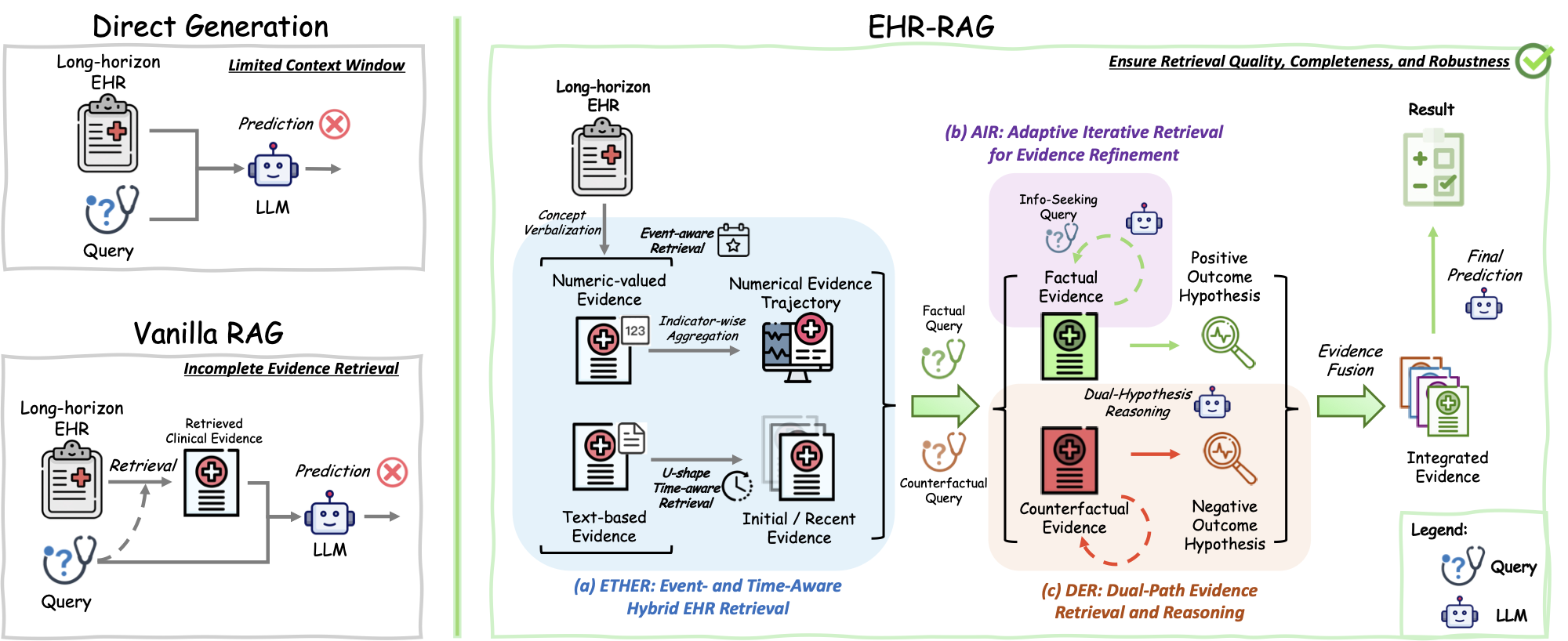

EHR-RAG: Bridging Long-Horizon Structured Electronic Health Records and Large Language Models via Enhanced Retrieval-Augmented Generation

Lang Cao, Qingyu Chen, Yue Guo

Preprint, 2026

bibtex / paper / code

[AI for Health]

EHR-RAG is a retrieval-augmented framework with time-aware, dual-path, and iterative retrieval, specifically designed for structured EHR data, enabling LLMs to reason effectively over long-horizon patient records.

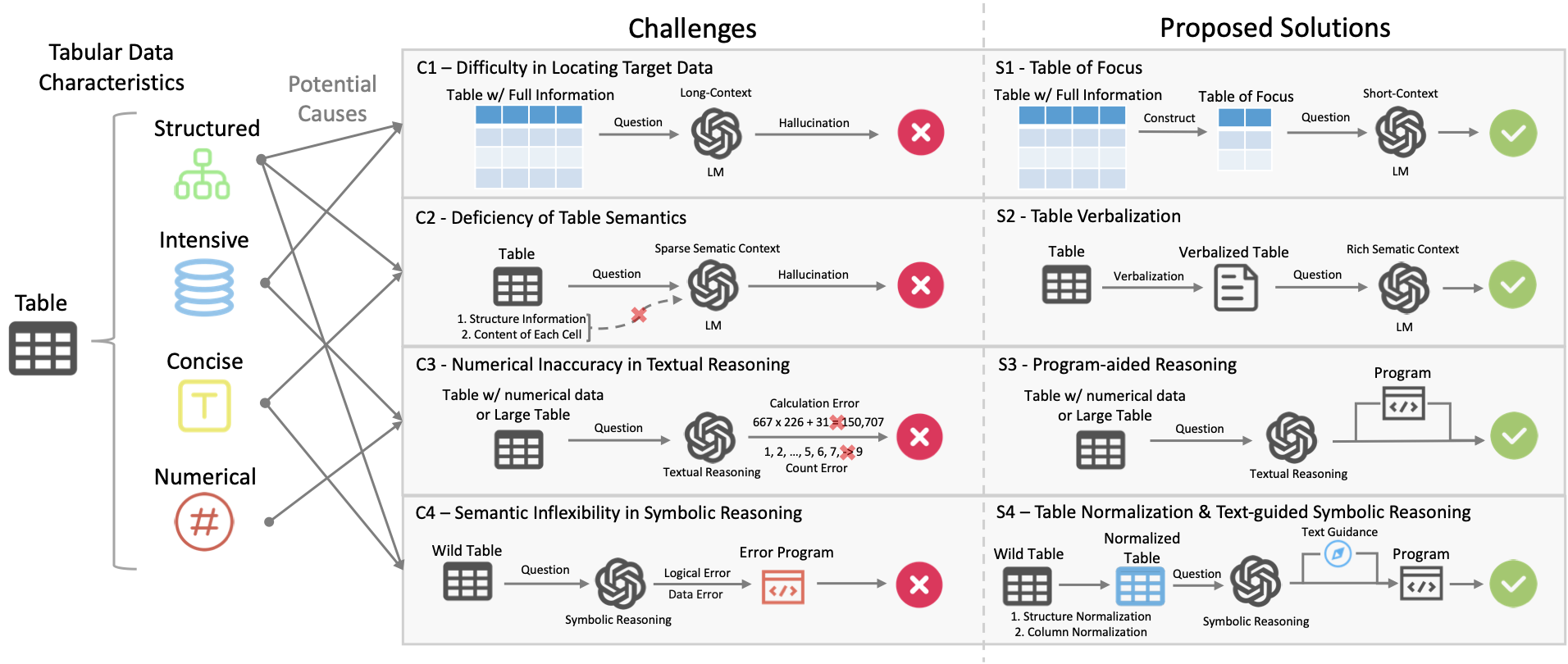

TableMaster: A Recipe to Advance Table Understanding with Language Models

Lang Cao, Hanbing Liu

ICLR'26, 2026

bibtex / paper / code

[LLM for Table Understanding]

TableMaster analyzes the challenges of table understanding with language models and provides a comprehensive recipe and framework to address them.

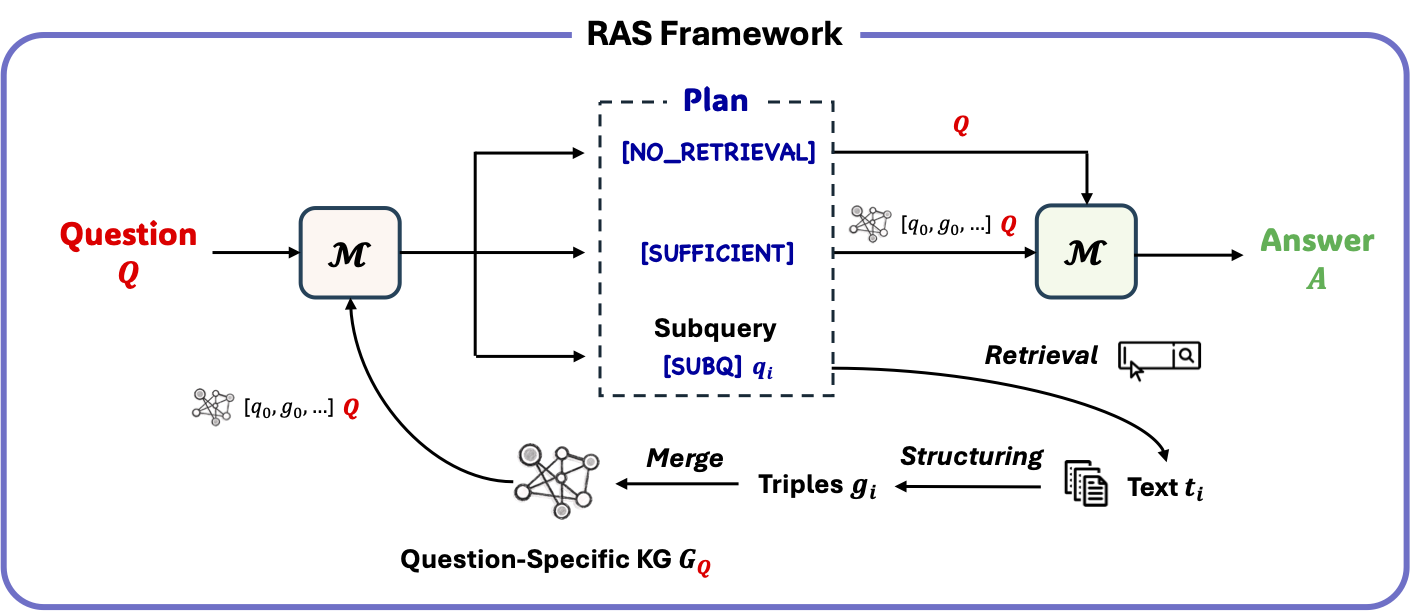

RAS: Enhanced Knowledge-Intensive LLM Generation with Iterative Retrieval-And-Structure

Pengcheng Jiang, Lang Cao, Ruike Zhu, Minhao Jiang, Yunyi Zhang, Jiaming Shen, Jimeng Sun, Jiawei Han

ICLR'26, 2026

bibtex / paper / code

[LLM for Table Understanding]

RAS is a retrieval-and-structuring framework that iteratively plans queries, converts retrieved text into structured triples, and builds question-specific knowledge graphs to enable LLMs to perform more accurate and robust multi-step reasoning on knowledge-intensive tasks.

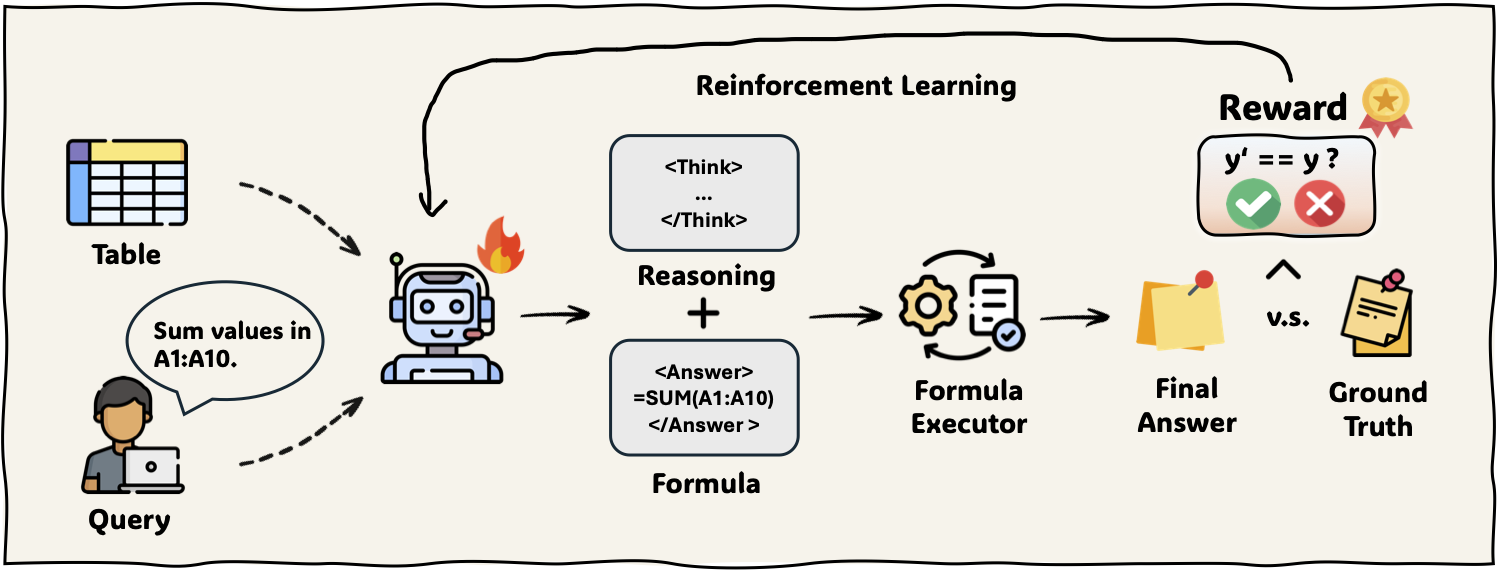

Fortune: Formula-Driven Reinforcement Learning for Symbolic Table Reasoning in Language Models

Lang Cao, Jingxian Xu, Hanbing Liu, Jinyu Wang, Mengyu Zhou, Haoyu Dong, Shi Han, Dongmei Zhang

Preprint, 2025

bibtex / paper / code

[LLM for Table Understanding]

Formula Tuning (Fortune) is a reinforcement learning approach that enables language models to perform symbolic table reasoning by deriving executable spreadsheet formulas.

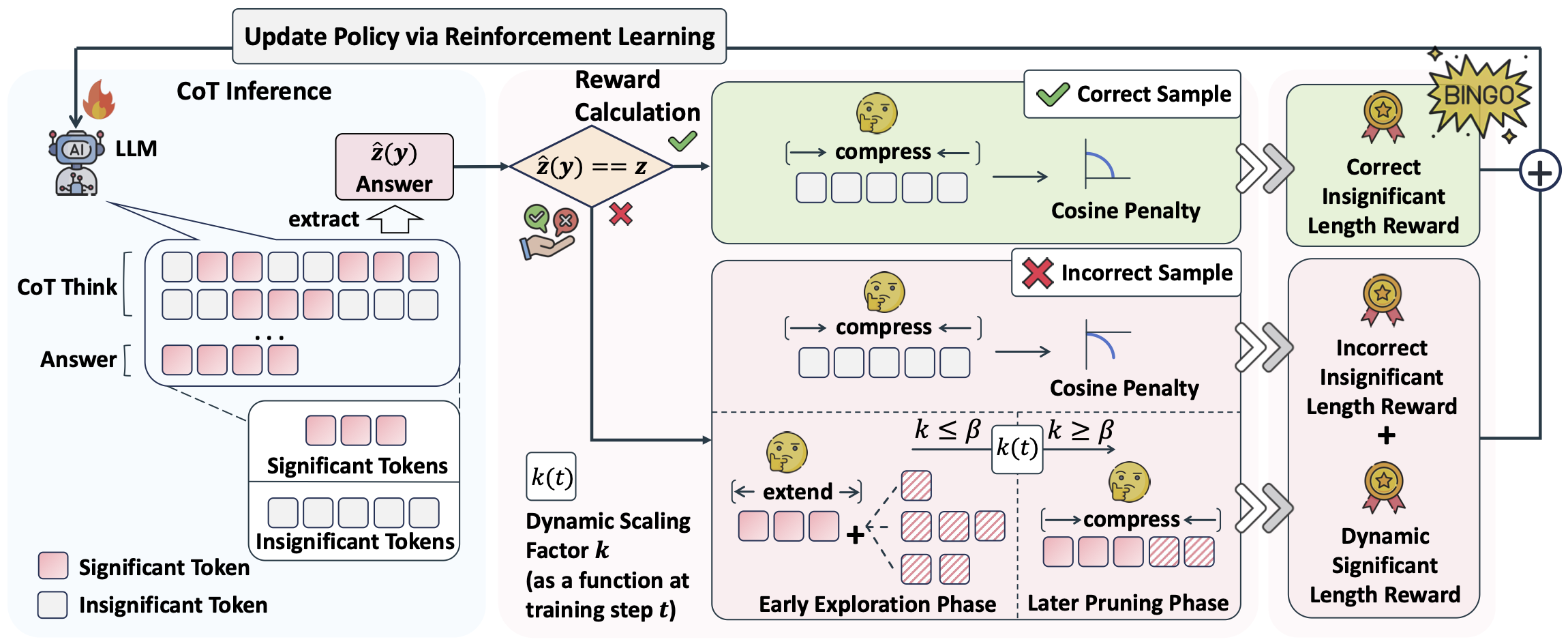

Bingo: Boosting Efficient Reasoning of LLMs via Dynamic and Significance-based Reinforcement Learning

Hanbing Liu, Lang Cao, Yuanyi Ren, Mengyu Zhou, Haoyu Dong, Xiaojun Ma, Shi Han, Dongmei Zhang

Preprint, 2025

bibtex / paper / code

[LLM Reasoning]

Bingo is a reinforcement learning framework that trains LLMs for efficient reasoning by combining significance-aware and dynamic length rewards, improving both accuracy and efficiency across reasoning benchmarks.

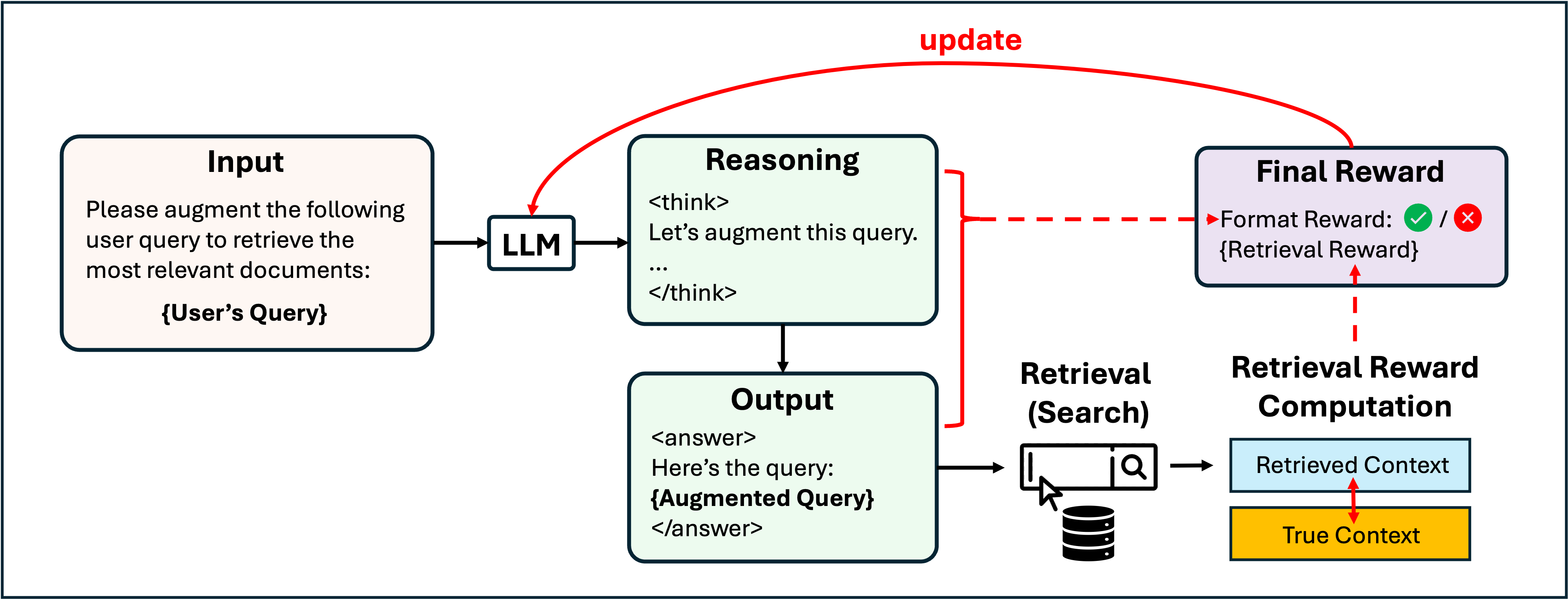

DeepRetrieval: Hacking Real Search Engines and Retrievers with Large Language Models via Reinforcement Learning

Pengcheng Jiang, Jiacheng Lin, Lang Cao, Runchu Tian, SeongKu Kang, Zifeng Wang, Jimeng Sun, Jiawei Han

COLM'25, 2025

bibtex / page / paper / code

[LLM Others]

DeepRetrieval is a framework that leverages reinforcement learning to hack real-world search engines and retrievers by training LLMs to perform effective retrieval tasks.

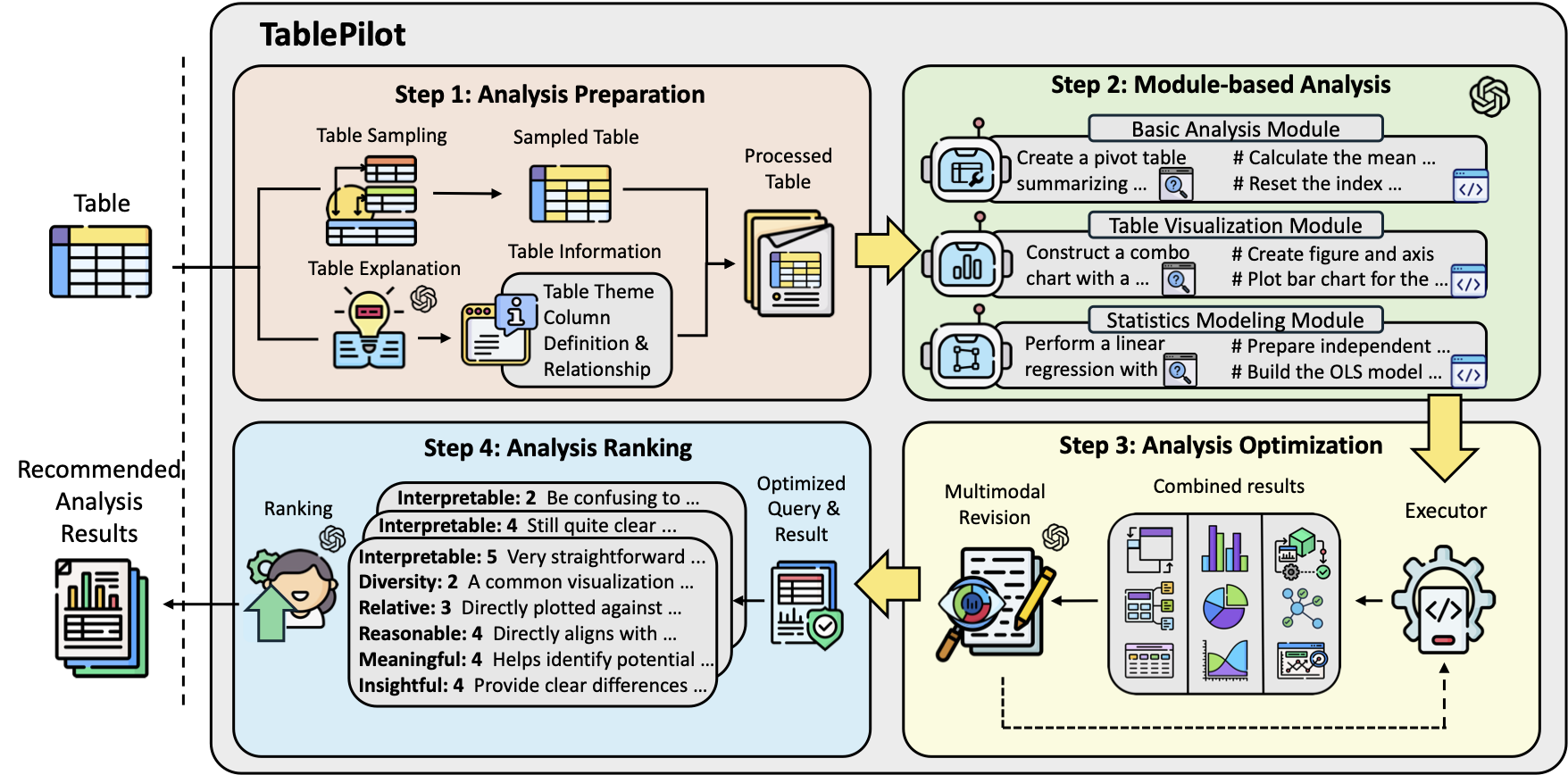

TablePilot: Recommending Human-Preferred Tabular Data Analysis with Large Language Models

Deyin Yi, Yihao Liu, Lang Cao, Mengyu Zhou, Haoyu Dong, Shi Han, Dongmei Zhang

ACL'25 Industry Track (Oral), 2025

bibtex / paper / poster / code

[LLM for Table Understanding]

TablePilot is a framework based on LLMs; when given an input table, it outputs recommended data analysis queries along with their corresponding code and results.

A foundation model for human-AI collaboration in medical literature mining

Zifeng Wang, Lang Cao, Qiao Jin, Joey Chan, Nicholas Wan, Behdad Afzali, Hyun-Jin Cho, Chang-In Choi, Mehdi Emamverdi, Manjot K. Gill, Sun-Hyung Kim, Yijia Li, Yi Liu, Yiming Luo, Hanley Ong, Justin F. Rousseau, Irfan Sheikh, Jenny J. Wei, Ziyang Xu, Christopher M. Zallek, Kyungsang Kim, Yifan Peng, Zhiyong Lu, Jimeng Sun

Nature Communications, 2025

bibtex / paper / code

[AI for Health]

LEADS is a specialized foundation model for medical literature mining that outperforms generic LLMs across multiple tasks and significantly improves accuracy and efficiency in expert workflows for evidence-based medicine.

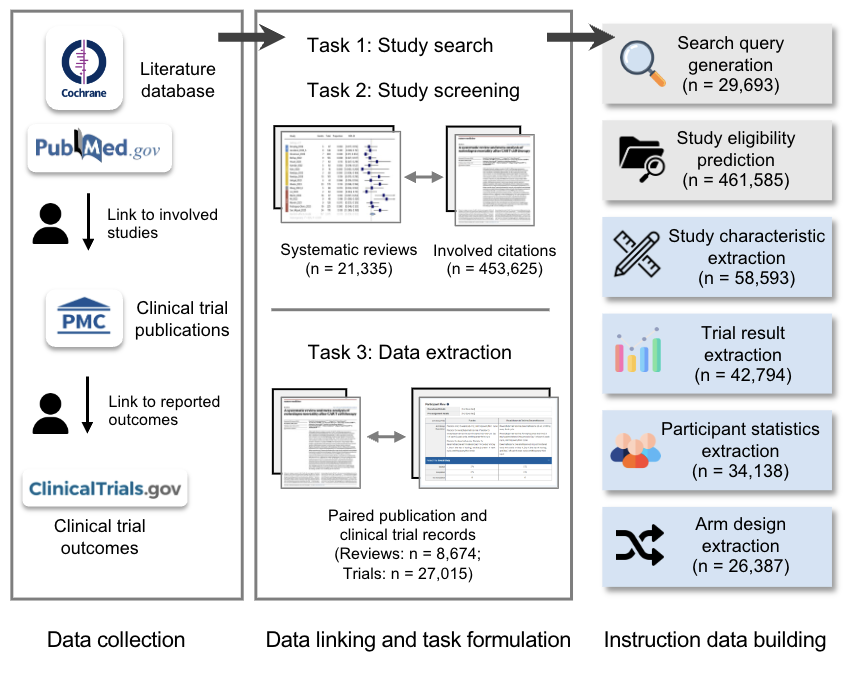

Accelerating clinical evidence synthesis with large language models

Zifeng Wang, Lang Cao, Benjamin Danek, Qiao Jin, Zhiyong Lu, Jimeng Sun

npj Digital Medicine, 2025

bibtex / paper / code

[AI for Health]

TrialMind is a specialized generative AI system for clinical evidence synthesis that surpasses general LLMs in search, screening, and data extraction, significantly enhancing accuracy and efficiency in expert-driven systematic reviews.

KG-FIT: Knowledge Graph Fine-Tuning Upon Open-World Knowledge

Pengcheng Jiang, Lang Cao, Cao Xiao, Parminder Bhatia, Jimeng Sun, Jiawei Han

NeurIPS'24, 2024

bibtex / paper / code

[LLM Others]

KG-FIT enhances knowledge graph embeddings by integrating LLM-guided hierarchical semantics with KG structure, achieving significant performance gains in link prediction across multiple benchmarks.

Learn to Refuse: Making Large Language Models More Controllable and Reliable through Knowledge Scope Limitation and Refusal Mechanism

Lang Cao

EMNLP'24 Main Conference, 2024

bibtex / paper / code

[LLM Generation]

Learn to Refuse (L2R) is a method that empowers large language models to refuse to answer difficult questions, thereby improving accuracy and reliability by utilizing a separate, expandable knowledge base.

GraphReason: Enhancing Reasoning Capabilities of Large Language Models through A Graph-Based Verification Approach

Lang Cao

ACL'24 Natural Language Reasoning and Structured Explanations Workshop, 2024

bibtex / paper / code

[LLM Reasoning]

GraphReason enhances the reasoning capabilities of large language models by verifying their reasoning process through a graph-based framework.

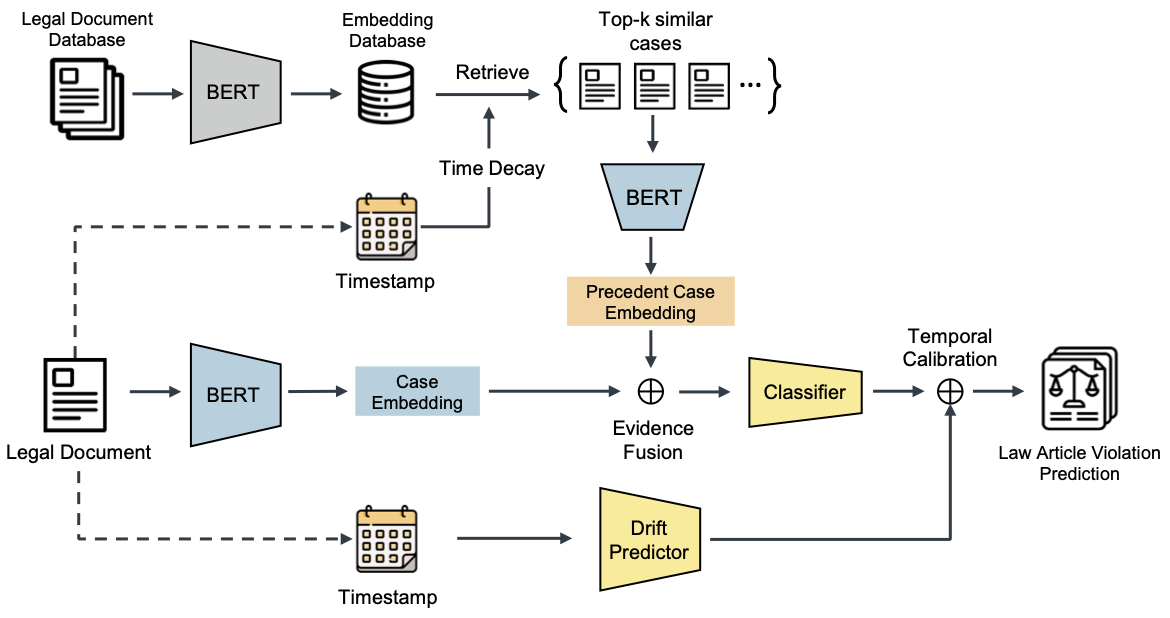

PILOT: Legal Case Outcome Prediction with Case Law

Lang Cao, Zifeng Wang, Cao Xiao, Jimeng Sun

NAACL'24 Main Conference, 2024

bibtex / paper / code

[NLP Applications]

PILOT is a legal case outcome prediction model for case law systems that retrieves relevant precedents and accounts for temporal legal shifts, significantly outperforming prior civil-law-focused approaches.

For more, please visit my full research page or check out my Google Scholar profile.

Education and Experience

Ubiquant

May 2025 - Aug. 2025 | Shanghai, China

AI and Quant Intern

Research Focus: Alpha Mining, AI for Quantitative Trading, LLM Agents for Databases and Deep Research.

Microsoft Research

Aug. 2024 - June 2025 | Beijing, China

Research Intern at Data Knowledge and Intelligence Group

Research Focus: Spreadsheet Intelligence, LLM for Table Understanding, General LLM Reasoning.

Mentors: Haoyu Dong, Mengyu Zhou

Tsinghua University

Nov. 2024 - Feb. 2025 | Beijing, China

Research Assistant at THU-NLP Lab

Research Focus: Multi-modal Learning and Reasoning.

Keiji AI

Jan. 2024 - May 2024 | Remote, USA

Research Intern

Research Focus: AI for Clinical Trials, Medical AI Agents.

iFLYTEK

June 2021 - Aug. 2021 | Hefei, China

AI Algorithm Intern at Smart Car Technology R&D Division

Focus: AI Applications for Smart Cars.

Mentor: Shenan Li

University of Illinois Urbana-Champaign (UIUC)

Doctor of Philosophy in Computer Science

Aug. 2025 - Present | Urbana, USA

Research Area: Large Language Model; Agent; Reasoning; AI for Health

Advisor: Yue Guo

Master of Science in Computer Science

Aug. 2023 - May 2024 | Urbana, USA

Research Assistant at Sunlab

Jan. 2023 - May 2024 | Urbana, USA

Research Focus: NLP / LLM Applications for Healthcare and Law.

Wuhan University of Technology (WUT)

Bachelor of Engineering in Software Engineering

Sept. 2018 - June 2022 | Wuhan, China

Rank 1st/79, GPA 93.51/100 (3.94/4.0), National Scholarship

Miscellanea

Selected Awards

Invited Talks

Academic Service

© Copyright Lang Cao (last updated February 01, 2026).